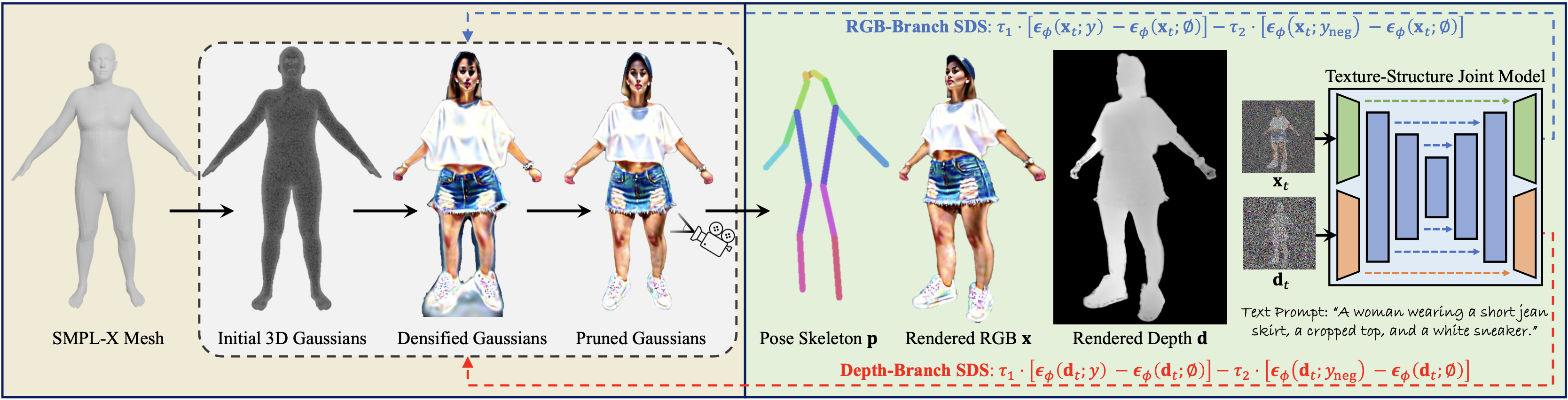

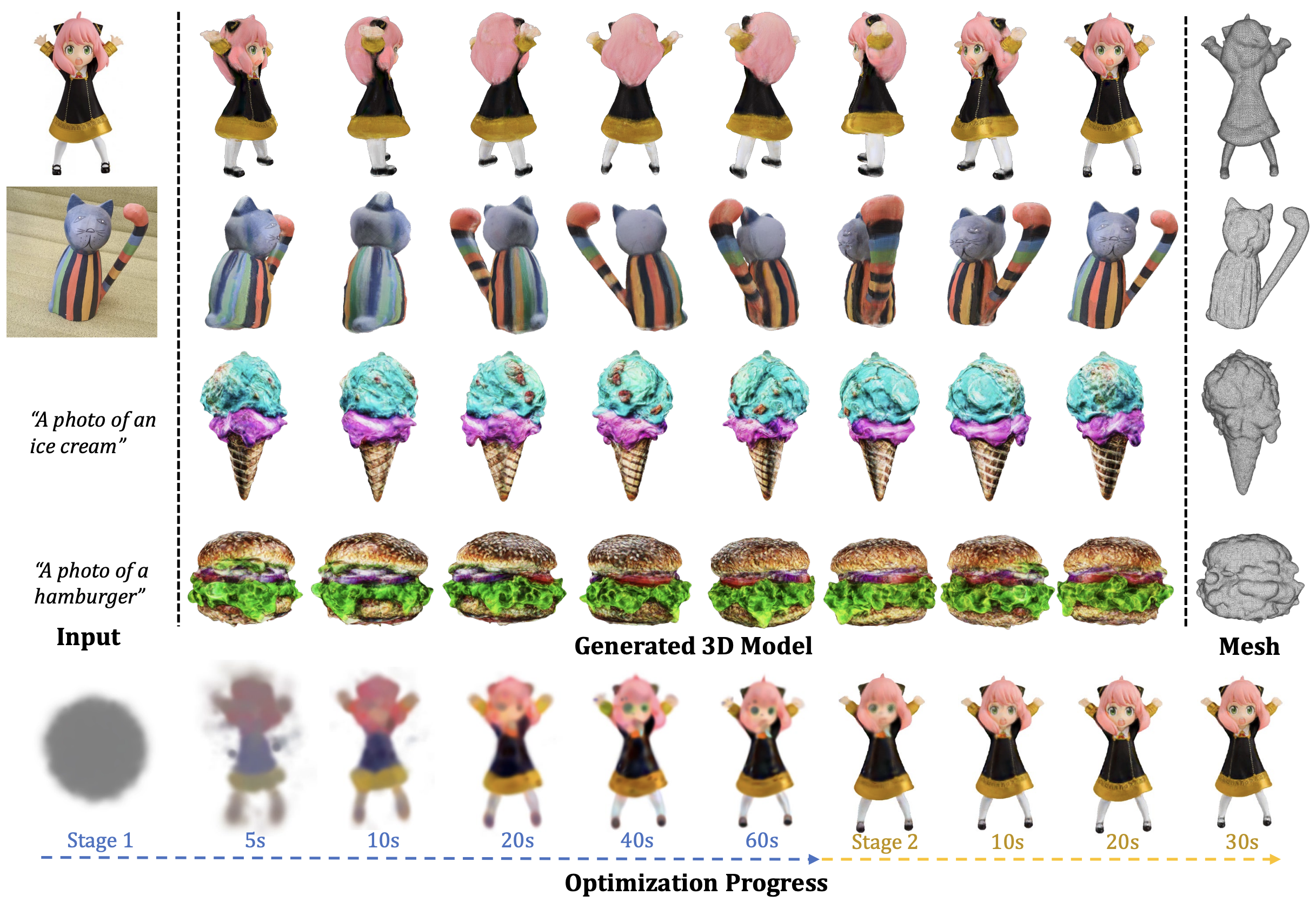

Overview of the proposed HumanGaussian Framework. We generate high-quality 3D humans from text prompts with the neural representation of 3D Gaussian Splatting (3DGS). In

Structure-Aware SDS, we start from the SMPL-X prior to densely sample Gaussians on the human mesh surface as initial center positions. Then, a Texture-Structure Joint Model is trained to simultaneously denoise the image

x and depth

d conditioned on pose skeleton

p. Based on this, we design a dual-branch SDS to jointly optimize human appearance and geometry, where the 3DGS density is adaptively controlled by distilling from both the RGB and depth space. In

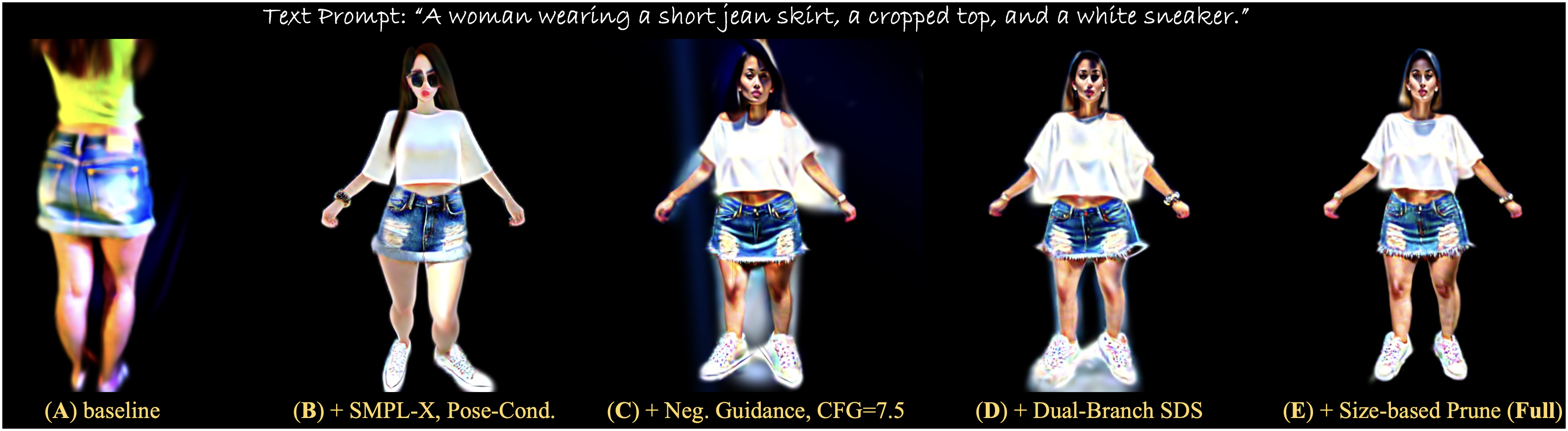

Annealed Negative Prompt Guidance, we use the cleaner classifier score with an annealed negative score to regularize the stochastic SDS gradient of high variance. The floating artifacts are further eliminated based on Gaussian size in a prune-only phase to enhance generation smoothness.
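The caption says Gaussians are densely sampled on the SMPL-X mesh surface but does not say how. A common way to do this is area-weighted sampling: pick triangles with probability proportional to their area, then draw a uniform barycentric point inside each. The sketch below assumes the mesh is already available as plain vertex and face lists (loading SMPL-X itself is out of scope), and the function names are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def triangle_area(a: Vec3, b: Vec3, c: Vec3) -> float:
    """Area of triangle abc: half the norm of the cross product (b - a) x (c - a)."""
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    cross = (
        u[1] * v[2] - u[2] * v[1],
        u[2] * v[0] - u[0] * v[2],
        u[0] * v[1] - u[1] * v[0],
    )
    return 0.5 * math.sqrt(sum(x * x for x in cross))


def sample_surface_points(
    vertices: Sequence[Vec3],
    faces: Sequence[Tuple[int, int, int]],
    num_points: int,
    seed: int = 0,
) -> List[Vec3]:
    """Hypothetical helper: uniform (area-weighted) samples on a triangle mesh,
    usable as initial Gaussian centers."""
    rng = random.Random(seed)
    areas = [triangle_area(vertices[i], vertices[j], vertices[k]) for i, j, k in faces]
    # Larger triangles receive proportionally more samples.
    chosen = rng.choices(range(len(faces)), weights=areas, k=num_points)
    points = []
    for f in chosen:
        a, b, c = (vertices[idx] for idx in faces[f])
        # Uniform point in the triangle: fold samples outside it back inside.
        r1, r2 = rng.random(), rng.random()
        if r1 + r2 > 1.0:
            r1, r2 = 1.0 - r1, 1.0 - r2
        points.append(tuple(a[i] + r1 * (b[i] - a[i]) + r2 * (c[i] - a[i]) for i in range(3)))
    return points
```

Sampling by area rather than per vertex keeps the initial Gaussian density even across regions where the mesh tessellation is coarse or fine.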
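One way to write the dual-branch SDS is to extend the standard SDS gradient to a denoiser that predicts noise for the image and the depth jointly. In this sketch, $\theta$ are the 3DGS parameters, $x$ and $d$ are the rendered image and depth, $x_t$ and $d_t$ their noised versions at timestep $t$, $y$ is the text prompt, $p$ the pose skeleton, and $w(t)$ the usual timestep weighting. The balance weight $\lambda$ between the two branches is a hypothetical addition, and this is not claimed to be the paper's exact equation.

$$
\nabla_\theta \mathcal{L}_{\text{SDS}} = \mathbb{E}_{t,\epsilon_x,\epsilon_d}\left[ w(t)\left( \big(\hat{\epsilon}^{x}_{\phi} - \epsilon_x\big)\frac{\partial x}{\partial \theta} + \lambda\,\big(\hat{\epsilon}^{d}_{\phi} - \epsilon_d\big)\frac{\partial d}{\partial \theta} \right)\right],
\qquad
\big(\hat{\epsilon}^{x}_{\phi}, \hat{\epsilon}^{d}_{\phi}\big) = \hat{\epsilon}_{\phi}(x_t, d_t;\, y, p, t)
$$

Because both residuals come from a single forward pass of the joint model, the appearance and geometry gradients are conditioned on the same prompt and skeleton and stay consistent with each other.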
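The caption states that 3DGS density is controlled by distilling from both the RGB and depth space. A plausible reading, assumed here, is that the standard 3DGS densification rule is driven by the view-space positional gradient of the combined loss, so the gradient of each Gaussian is the sum of its RGB-branch and depth-branch contributions. The clone/split rule follows the original 3DGS heuristic. The thresholds and function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec2 = Tuple[float, float]


def combined_grad_norm(per_view: Sequence[Tuple[Vec2, Vec2]]) -> float:
    """Average over views of |grad_rgb + grad_depth| for one Gaussian.

    Each entry holds the view-space positional gradient from the RGB branch
    and from the depth branch for one rendered view (assumed inputs).
    """
    if not per_view:
        return 0.0
    total = 0.0
    for (gx_rgb, gy_rgb), (gx_d, gy_d) in per_view:
        # Gradient of the summed loss is the vector sum of the branch gradients.
        total += math.hypot(gx_rgb + gx_d, gy_rgb + gy_d)
    return total / len(per_view)


def densify_decisions(
    grads: Sequence[Sequence[Tuple[Vec2, Vec2]]],
    max_scales: Sequence[float],
    grad_threshold: float,
    size_threshold: float,
) -> List[str]:
    """Return 'clone', 'split', or 'keep' for each Gaussian (3DGS-style rule)."""
    decisions = []
    for per_view, s in zip(grads, max_scales):
        if combined_grad_norm(per_view) < grad_threshold:
            decisions.append("keep")
        elif s <= size_threshold:
            decisions.append("clone")  # small Gaussian in an under-reconstructed region
        else:
            decisions.append("split")  # large Gaussian spanning too much detail
    return decisions
```

With this setup, a Gaussian that receives a strong depth gradient but little RGB gradient can still be densified, which is one way the depth branch can shape geometry.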
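The guidance term described above can be sketched element-wise: the classifier score is the difference between the text-conditioned and unconditional noise predictions, and the negative score is the same difference for a negative prompt. The sampled-noise term of vanilla SDS, the main source of its variance, does not appear. The linear annealing over training steps, the parameter names, and the use of plain float lists in place of noise tensors are all assumptions for illustration. The paper's actual schedule may differ.

```python
from typing import List, Sequence


def annealed_weight(step: int, total_steps: int, w_start: float, w_end: float) -> float:
    """Hypothetical schedule: linearly anneal the negative-prompt weight over training."""
    frac = min(max(step / max(total_steps, 1), 0.0), 1.0)
    return w_start + (w_end - w_start) * frac


def guided_score(
    eps_cond: Sequence[float],
    eps_uncond: Sequence[float],
    eps_neg: Sequence[float],
    guidance_scale: float,
    neg_weight: float,
) -> List[float]:
    """Classifier score minus a weighted negative score, element-wise.

    classifier score: eps(x_t; y) - eps(x_t; empty prompt)
    negative score:   eps(x_t; y_neg) - eps(x_t; empty prompt)
    """
    return [
        guidance_scale * (c - u) - neg_weight * (n - u)
        for c, u, n in zip(eps_cond, eps_uncond, eps_neg)
    ]
```

For example, `guided_score([1.0, 2.0], [0.0, 0.0], [0.5, 1.0], 2.0, annealed_weight(0, 100, 1.0, 0.0))` returns `[1.5, 3.0]`. Subtracting the negative score pushes the rendering away from undesired attributes, and annealing its weight keeps that push from dominating as optimization proceeds.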
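The prune-only phase removes Gaussians by size without cloning or splitting any new ones. The caption does not say which size range counts as a floater, so the sketch below exposes both a lower and an upper bound on the largest axis scale. The `Gaussian` record, the bounds, and the function name are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Gaussian:
    center: Tuple[float, float, float]
    scale: Tuple[float, float, float]  # per-axis extent of the Gaussian


def prune_by_size(
    gaussians: List[Gaussian],
    min_size: Optional[float] = None,
    max_size: Optional[float] = None,
) -> List[Gaussian]:
    """Keep Gaussians whose largest axis scale lies within [min_size, max_size].

    Nothing is added in a prune-only step, so the count can only shrink.
    """
    kept = []
    for g in gaussians:
        size = max(g.scale)
        if min_size is not None and size < min_size:
            continue
        if max_size is not None and size > max_size:
            continue
        kept.append(g)
    return kept
```

Running pruning as its own phase, with densification switched off, ensures that removed floaters are not regrown by later clone or split steps.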

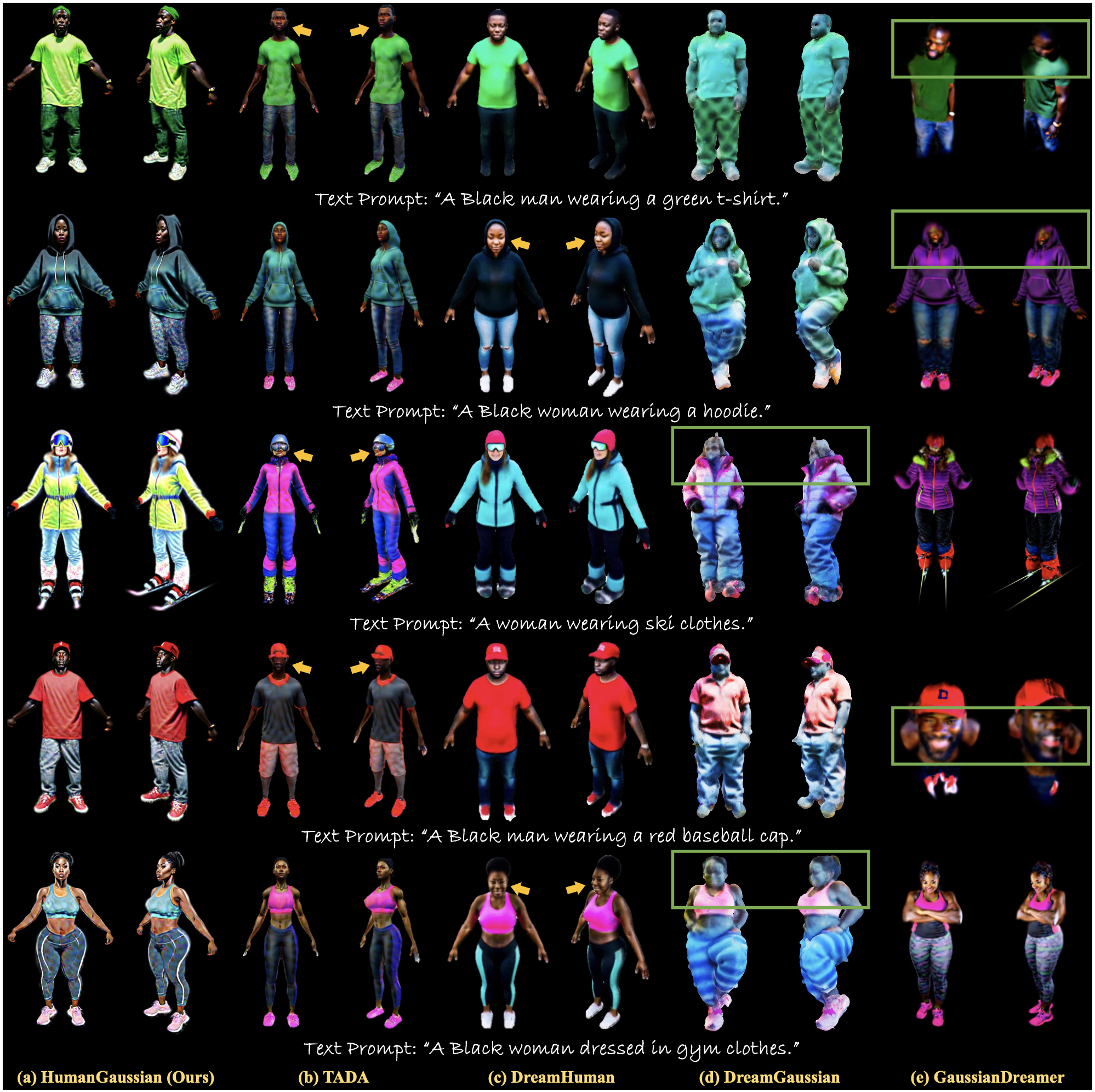

Comment: One of the first works to adapt Gaussian Splatting to text-to-3D generation, here specialized to 3D humans.